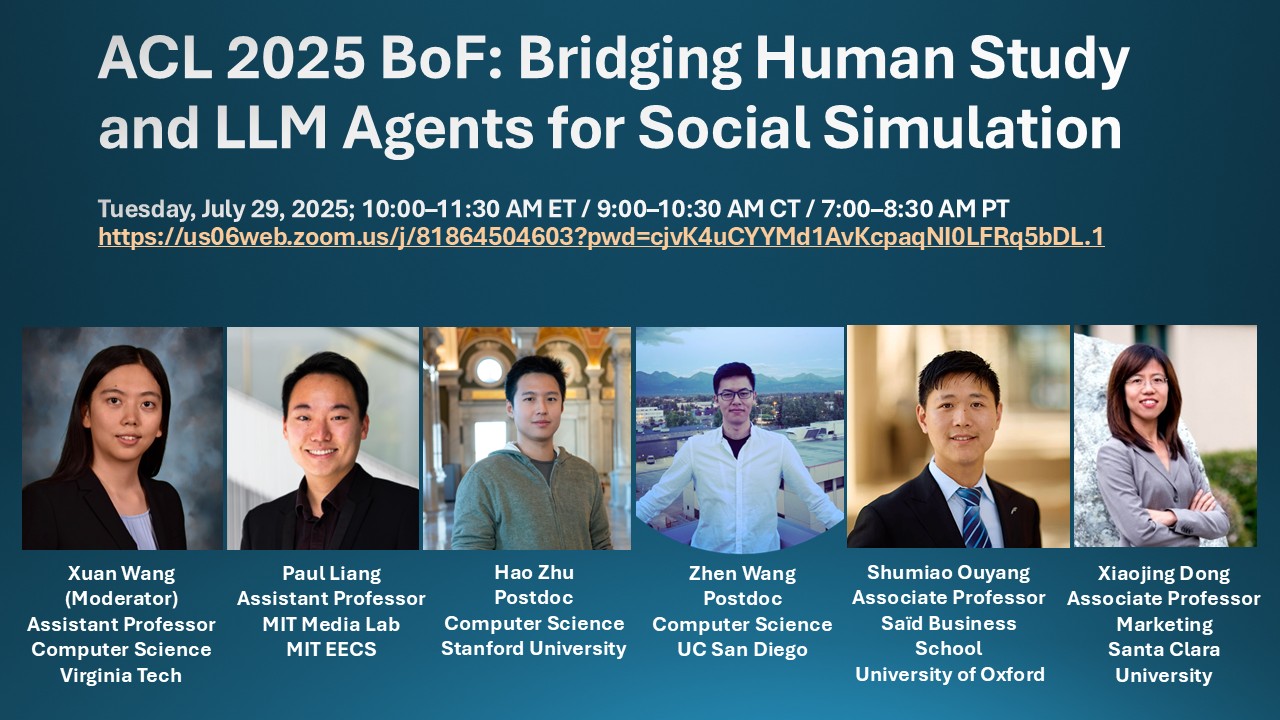

ACL 2025 BoF: Bridging Human Study and LLM Agents for Social Simulation

Date: Tuesday, July 29, 2025

Time: 16:00–17:30 Vienna time (10:00–11:30 AM ET / 9:00–10:30 AM CT / 7:00–8:30 AM PT in the US)

Location: Online via Underline

Event Description

Recent advances in large language models (LLMs) have opened up new opportunities for simulating complex human behaviors and interactions at scale. These capabilities make large-scale social simulations feasible, enabling researchers to explore hypotheses in social science, psychology, political science, and beyond. However, critical gaps remain between traditional empirical human studies and LLM-driven agent simulations. This BoF aims to bring together researchers across NLP, social sciences, HCI, and AI ethics to discuss key questions: How can we ensure that LLM agents faithfully represent human behaviors? What are the methodological, ethical, and technical challenges in integrating human data and LLM simulations? How can social simulations with LLMs complement or extend human subject research?

Pre-Event Survey

This 90-minute participant-driven session aims to foster meaningful discussion and collaboration across NLP, cognitive science, and computational social science communities. In addition to open discussion (welcoming everyone!), we are also looking for participants who might be interested in:

- Giving a short lightning talk during the BoF

- Collaborating on a potential position paper after the event

- Helping organize a follow-up workshop (e.g., ACL 2026)

To help us prepare and coordinate, especially if you are interested in any of the above activities, please fill out this short survey: Pre-Event Survey. The survey deadline is Sunday, July 27, 2025.

Invited Speakers

We have assembled a panel of leading researchers in LLM agents and social science. The panelists will share their insights and answer audience questions during the panel discussion.

- Xuan Wang (Moderator), Assistant Professor, Computer Science, Virginia Tech

- Paul Liang, Assistant Professor, MIT Media Lab, MIT EECS

- Hao Zhu, Postdoc, Computer Science, Stanford University

- Zhen Wang, Postdoc, Computer Science, UC San Diego

- Shumiao Ouyang, Associate Professor, Saïd Business School, University of Oxford

- Xiaojing Dong, Associate Professor, Marketing, Santa Clara University

We look forward to building this community and seeing you at ACL 2025!

Organizers

- Xuan Wang, Assistant Professor, Department of Computer Science, Virginia Tech

- Naren Ramakrishnan, Thomas L. Phillips Professor, Department of Computer Science, Virginia Tech

- Chris North, Professor, Department of Computer Science, Virginia Tech

- Le Wang, David M. Kohl Chair and Professor, Department of Agricultural and Applied Economics, Virginia Tech

- Yun Huang, Associate Professor, School of Information Sciences, University of Illinois at Urbana-Champaign

- Priya Pitre, PhD Student, Department of Computer Science, Virginia Tech

- Gaurav Srivastava, MS Student, Department of Computer Science, Virginia Tech